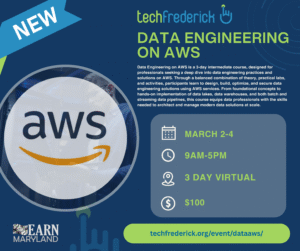

Data Engineering on AWS

Date: March 2nd, 3rd, 4th

Time: 9:00 AM – 5:00 PM

Location: Virtual

Level: Intermediate

Cost: $100

About the Course

Data Engineering on AWS is a 3-day intermediate course, designed for professionals seeking a deep dive into data engineering practices and solutions on AWS. Through a balanced combination of theory, practical labs, and activities, participants learn to design, build, optimize, and secure data engineering solutions using AWS services. From foundational concepts to hands-on implementation of data lakes, data warehouses, and both batch and streaming data pipelines, this course equips data professionals with the skills needed to architect and manage modern data solutions at scale.

Course Objectives

- Understand the foundational roles and key concepts of data engineering, including data personas, data discovery, and relevant AWS services.
- Identify and explain the various AWS tools and services crucial for data engineering, encompassing orchestration, security, monitoring, CI/CD, IaC, networking, and cost optimization.
- Design and implement a data lake solution on AWS, including storage, data ingestion, transformation, and serving data for consumption.
- Optimize and secure a data lake solution by implementing open table formats, security measures, and troubleshooting common issues.
- Design and set up a data warehouse using Amazon Redshift Serverless, understanding its architecture, data ingestion, processing, and serving capabilities.
- Apply performance optimization techniques to data warehouses in Amazon Redshift, including monitoring, data optimization, query optimization, and orchestration.
- Manage security and access control for data warehouses in Amazon Redshift, understanding authentication, data security, auditing, and compliance.
- Design effective batch data pipelines using appropriate AWS services for processing and transforming data.
- Implement comprehensive strategies for batch data pipelines, covering data processing, transformation, integration, cataloging, and serving data for consumption.
- Optimize, orchestrate, and secure batch data pipelines, demonstrating advanced skills in data processing automation and security.
- Architect streaming data pipelines, understanding various use cases, ingestion, storage, processing, and analysis using AWS services.
- Optimize and secure streaming data solutions, including compliance considerations and access control.

Who Should Attend

This intermediate-level course is designed for professionals who want to design, build, optimize, and secure data engineering solutions using AWS.

REGISTER NOW!